How the pharmaceutical industry can meet the challenges of data quality management

Pharmaceutical companies rely heavily on huge amounts of data that are used in every process, from interpreting clinical findings to measuring the effectiveness of drugs based on real-world health outcomes. In addition, they must maintain continuous compliance with increasingly rigorous standards and changing legal regulations. All this requires constant data quality monitoring, since even a small mistake can lead to legal consequences or reputational damage and, above all, may put the health and lives of patients at risk.

Data quality assurance in the pharmaceutical and life science industries was the main theme of this year’s Digital Future Day, which took place on 25 October 2019 at the Swiss headquarters of Striped Giraffe in Zurich. The meeting was attended by several representatives of the largest Swiss pharmaceutical and life science companies.

This year’s event in Zurich demonstrated strong interest in emerging digital technologies for data quality analysis and assurance. In his welcome note, Igor Kleiman said that data quality has to be treated as a crucial standard that forms an important foundation of a company’s market value. This standard needs to be actively managed and supported, he emphasized.

Why is data quality that important?

Let’s take a look at a few figures from the current market research.

- 8-12% of operating profit is lost due to insufficient data quality (T. C. Redman, President of Navesink Consulting Group)

- Costs driven by bad data amounted to 16% of US GDP in 2016 (assessment by IBM Group)

- Companies that maintain high data quality standards tend to have growth rates twice as high as their sector average (Boston Aberdeen Group)

The issue with constantly growing data

“On average, the data volume in most industries doubles every two years,” says Mr. Kleiman. “The resulting challenges need to be addressed not only from the perspective of the corresponding IT systems but also, and above all, from the perspective of data management.”

Even master data are subject to constant change. Customers may have moved, changed their marital status, or switched to a different credit card provider. Missing data and duplicates also jeopardize data consistency and data integrity. On average, 20%-30% of commercial data are affected by these issues, which cause significant direct and indirect costs.

The problem of the increasing amount of data to be collected and monitored has not spared the pharmaceutical industry either. In the last couple of decades we’ve seen an explosion of information sources available about products, constituent substances, suppliers, organizations, markets, customers and so on.

Maintaining the high quality of this massive amount of data, ensuring its consistency between various IT systems within the company, as well as full compliance with all applicable standards and regulations are currently the most important challenges for the pharma industry in terms of its digitization strategy.

Krzysztof Wiśniewski, Senior System Architect DWH & BI, Striped Giraffe

Data quality in the digital reality

During the event, the main presentations on various aspects of data quality in the pharma industry were given by our leading expert Krzysztof Wiśniewski, Senior System Architect DWH & BI, who has recently been involved in several data quality projects for renowned European market leaders. Mr. Wiśniewski discussed the most important issues concerning data quality assurance in today’s digital reality, as well as the importance of developing data quality solutions and procedures as the main factors determining the success of an enterprise’s digital transformation.

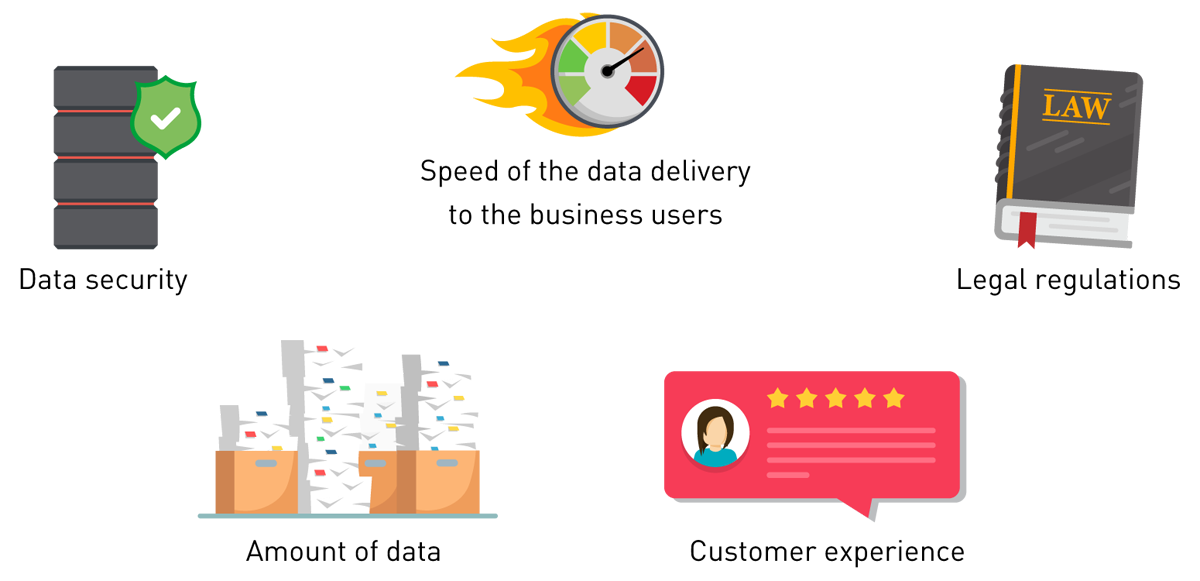

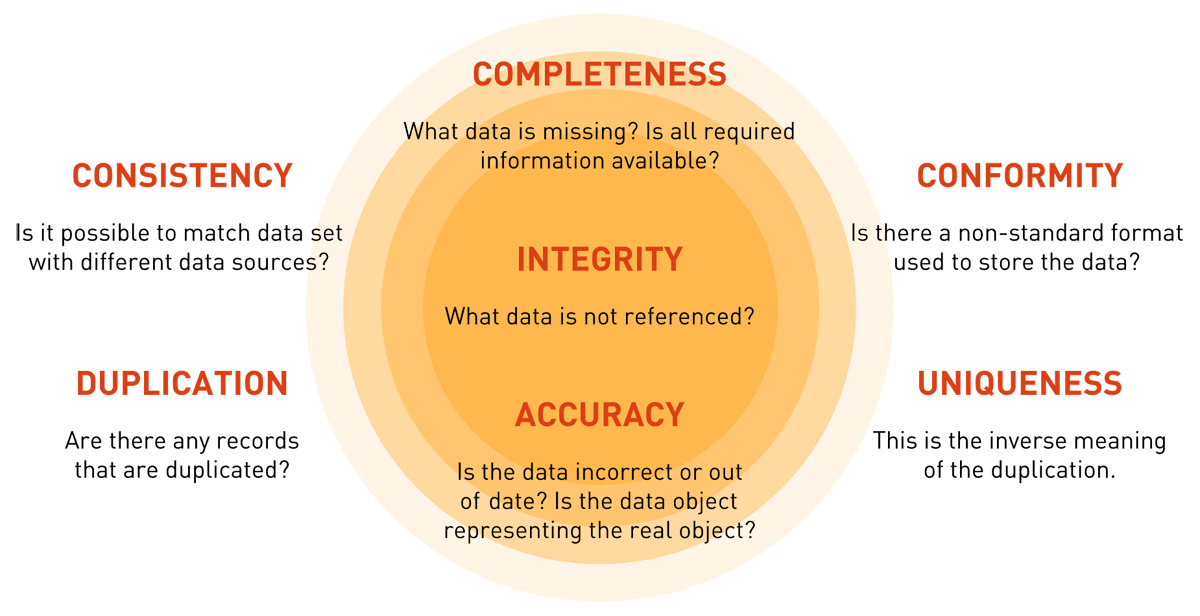

Figure 1 — Top factors impacting data quality.

Participants gained expert knowledge on data quality topics such as:

- Benefits of data quality improvement, for example reducing costs (e.g., of marketing campaigns or customer support), automating processes, preventing the distribution of poor-quality data between systems, and improving customer satisfaction and experience,

- Data-driven and process-driven strategies for improving data quality,

- Top factors impacting data quality, including data security, speed of the data delivery to the business users, legal regulations, amount of data, and customer experience (CX),

- Challenges in data governance programs, e.g. defining the data governance policy, determining which data must be under governance, deciding on architecture and tools, or defining KPIs, metrics and reporting strategies,

- Sources of bad data, such as human mistakes, no data strategy or bad data strategy, no cooperation between data consumers and data publishers, or no cooperation between business and IT departments,

- Data quality dimensions, including consistency, completeness, integrity, conformity, uniqueness, duplication, and accuracy,

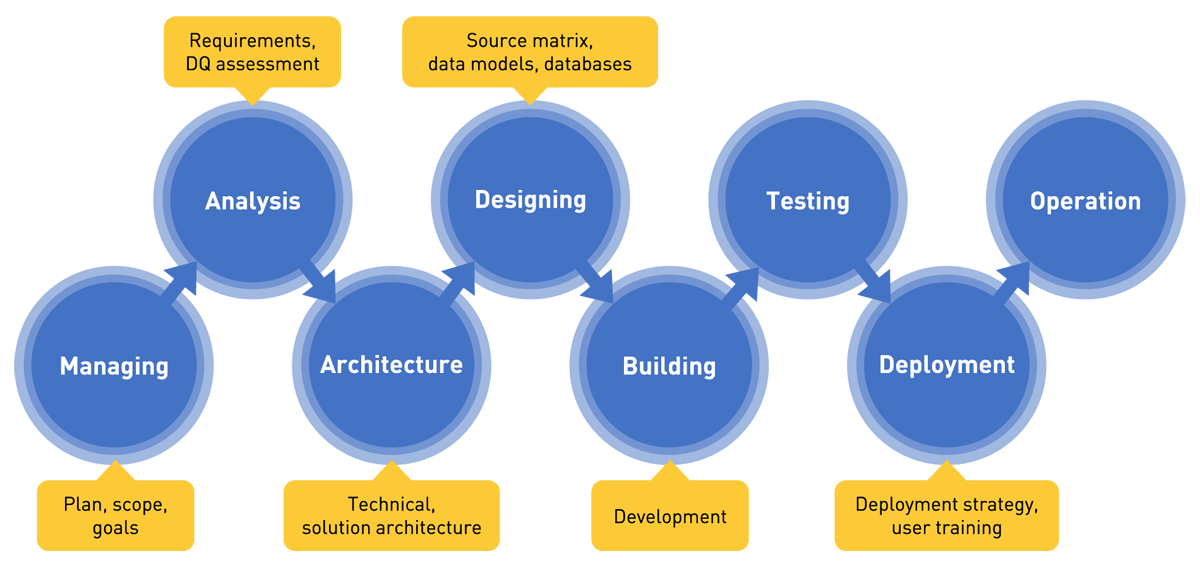

- Data quality project phases — from managing, through analysis, architecture, designing, building, testing, to deployment and operation,

- Various roles in a data quality project.

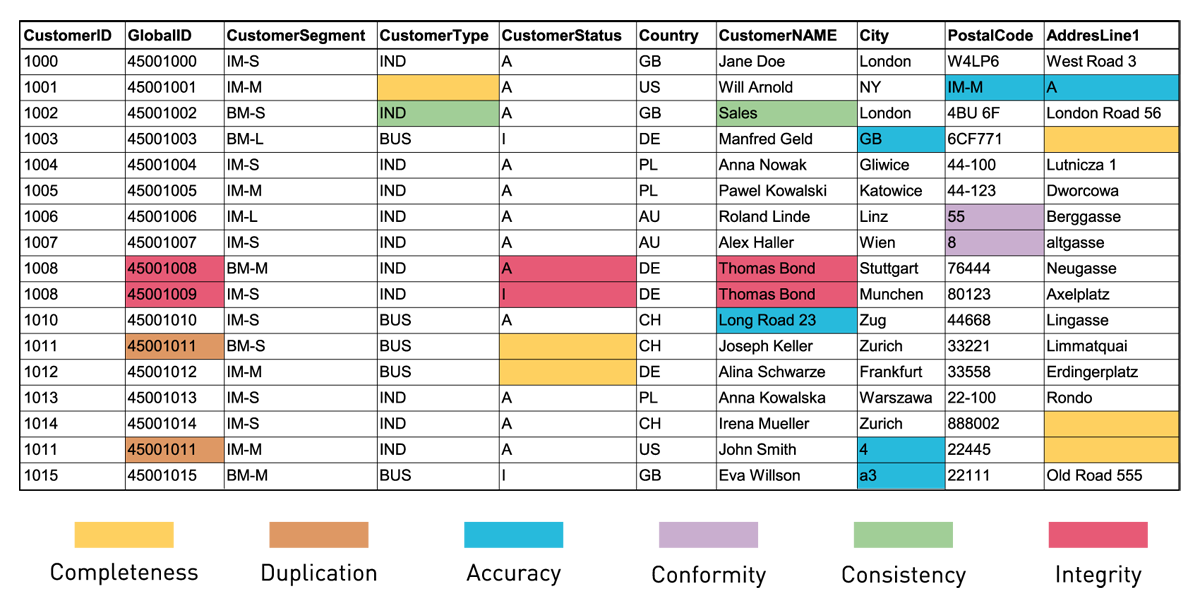

Figure 2 — Data quality dimensions.
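To make the idea of a dimension more concrete, here is a minimal Python sketch of how two of them, completeness and uniqueness, could be expressed as simple scores. The sample records, field names, and scoring formulas are assumptions made for illustration; they were not part of the presentation and are not tied to any particular tool.

```python
# Hypothetical sample records; field names and values are assumptions.
records = [
    {"id": "P-001", "name": "Aspirin 500 mg", "country": "CH"},
    {"id": "P-002", "name": None, "country": "DE"},
    {"id": "P-002", "name": "Ibuprofen 200 mg", "country": None},
    {"id": "P-004", "name": "Paracetamol 1 g", "country": ""},
]


def completeness(rows, field):
    """Share of rows in which `field` is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


def uniqueness(rows, field):
    """Share of distinct values of `field` among all rows."""
    if not rows:
        return 0.0
    values = [row.get(field) for row in rows]
    return len(set(values)) / len(values)


scores = {
    "completeness(name)": completeness(records, "name"),
    "completeness(country)": completeness(records, "country"),
    "uniqueness(id)": uniqueness(records, "id"),
}
for metric, value in scores.items():
    print(f"{metric}: {value:.0%}")
```

Scores like these could feed a scorecard or KPI dashboard, with one value per rule and dimension tracked over time.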

Data quality in practice — dedicated tools and customer success stories

Presentations of two projects implemented recently by Striped Giraffe for the pharmaceutical industry aroused great interest among the participants of our meeting in Zurich.

The first project discussed was aimed at improving the quality of regulatory data stored in the Regulatory Information Management System (RIMS) ahead of a planned migration to a new platform. The implemented solution makes it possible to constantly monitor and maintain high data quality by using reusable data quality rules and automated workflows. The goal of the second project was to develop a solution for data quality checks between the European Medicines Agency’s IDMP-SPOR data management services and the company’s key systems that store and process data required for compliance with the ISO IDMP standards.

Figure 3 — Examples of various data quality problems.

Both of the above-mentioned projects used Informatica’s dedicated data quality tools, including Informatica Data Quality, Informatica Analyst, and Informatica Developer. The participants were impressed by the live presentations of these tools, especially when Mr. Wiśniewski explained how they enable and support efficient cooperation between analysts and developers. During the presentation, the attendees were shown how data quality rules and data quality check workflows can be designed and developed using a clear, intuitive graphical interface with drag-and-drop features. The participants were also curious about the possibility of defining human tasks within otherwise fully automatic workflows where needed.

Figure 4 — Data quality project phases.

The RIMS Data Quality project aroused particular interest. The attendees especially appreciated the way in which the final results of the quality audits, i.e. the data quality issues, are presented to end users. In the solution developed by Striped Giraffe, the end user gets a direct link to the appropriate Product Detail Set (PDS) in the RIMS system where a data quality problem has been detected. In addition, the user is given all the necessary information about the medical product affected by the issue, along with a pointer to the guide or manual that explains how to solve this particular problem. All this information is presented in a clear and condensed form on a dedicated screen.

Data quality challenges

During the panel devoted to the challenges of assuring high data quality across the entire company, an interesting discussion was held on whether and how pharmaceutical companies measure the economic consequences of various data quality errors, such as a large number of duplicates in the customer database. Duplicates may result in, for example, thousands of unnecessary emails or letters being sent during a marketing campaign, which in turn translates into higher costs. They may also cause severe reputation issues, since one customer will receive the same information or request several times, or will be almost “stalked” by call center employees calling over and over again about the same matter.
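As a rough illustration of how the cost of duplicates could be estimated, the Python sketch below counts customer records that share the same normalized email address and multiplies that count by an assumed cost per mailing. The records, the matching key, and the cost figure are all hypothetical; real deduplication typically needs fuzzier matching across names and addresses.

```python
# Hypothetical customer records; all values are made up for illustration.
customers = [
    {"name": "Anna Meier", "email": "anna.meier@example.com"},
    {"name": "Anna  Meier", "email": "Anna.Meier@Example.com "},
    {"name": "Jan Kowalski", "email": "jan.kowalski@example.com"},
    {"name": "A. Meier", "email": "anna.meier@example.com"},
]
COST_PER_MAILING = 0.80  # assumed cost of one letter, in any currency


def normalize_email(email):
    """Trim whitespace and lowercase so trivially different spellings match."""
    return email.strip().lower()


def count_redundant(rows):
    """Count records that share a normalized email with an earlier record."""
    seen = set()
    redundant = 0
    for row in rows:
        key = normalize_email(row["email"])
        if key in seen:
            redundant += 1
        else:
            seen.add(key)
    return redundant


redundant = count_redundant(customers)
wasted = redundant * COST_PER_MAILING
print(f"{redundant} redundant mailings, about {wasted:.2f} wasted per campaign")
```

Even a simple estimate like this gives the financial impact of bad data a number that can be tracked from one campaign to the next.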

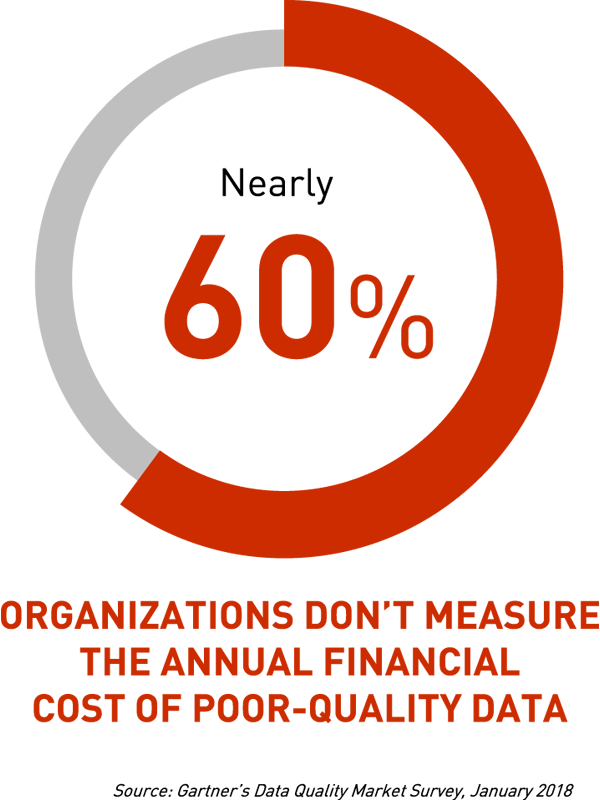

During the discussion, it turned out that only a small percentage of pharmaceutical companies monitor and try to measure the financial consequences of incorrect data on an ongoing basis. This is also confirmed by Gartner’s market research:

Figure 5 — 60% of organizations don’t measure the annual financial cost of poor-quality data.

During this part of the event, attendees had an opportunity to discuss with our experts such topics as:

- Organizational challenges, including setting up the data quality project, understanding the financial cost of bad data and its impact on reputation, cross-department cooperation in huge companies, overestimating the amount of time required to implement data quality programs, lack of knowledge about data quality projects, or no data quality roles defined in the organization,

- Challenges during a data quality process,

- Challenges when setting up a data quality project, e.g. analyzing the business processes, performing data assessment, determining responsibilities and roles, defining the terms, agreeing on what the company considers good-quality and bad-quality data, creating the rulebook, finding the data that can be used in reference tables, assigning the rules to the data quality dimensions, or creating the draft of the scorecard / KPI dashboard,

- Technical challenges, for example: analytical data differs from transactional data; not all data may be relevant for a particular purpose; data lineage needs to be a living process that is updated as systems and processes change; the ability to track and audit data lineage should be available on demand and should be part of the data quality solution; encoding and character sets; multiple languages; and different units of measure,

- Various challenges when designing and building data quality rules, e.g. how to define complex business rules with several combined conditions, how to include regulatory constraints, how to set a given range of values or verify if values in the column are of a given data type, how to create and use regular expression patterns like email address validation, etc.
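The kinds of rules mentioned in the last point can be sketched in a few lines of Python. This is only an illustrative example under stated assumptions: the field names (`contact_email`, `strength_mg`, `status`, `authorization_no`), the value range, and the deliberately simplified email pattern are hypothetical, and the projects described above built their rules in Informatica tools rather than in code like this.

```python
import re

# Simplified pattern for illustration only; it does not cover every valid address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def is_valid_email(value):
    """Pattern rule: the value must be a string that matches the email pattern."""
    return isinstance(value, str) and EMAIL_PATTERN.fullmatch(value) is not None


def in_range(value, low, high):
    """Type rule plus range rule; bool is excluded because it subclasses int."""
    is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
    return is_number and low <= value <= high


def check_record(record):
    """Apply all rules to one record and return a list of detected issues."""
    issues = []
    if not is_valid_email(record.get("contact_email")):
        issues.append("contact_email: invalid format")
    if not in_range(record.get("strength_mg"), 0.1, 5000):
        issues.append("strength_mg: not a number in 0.1..5000")
    # Combined condition: an authorized product must carry an authorization number.
    if record.get("status") == "authorized" and not record.get("authorization_no"):
        issues.append("authorization_no: required when status is 'authorized'")
    return issues


good = {"contact_email": "qa@example.com", "strength_mg": 500,
        "status": "authorized", "authorization_no": "12345"}
bad = {"contact_email": "qa@example", "strength_mg": "500",
       "status": "authorized", "authorization_no": ""}

print(check_record(good))
for issue in check_record(bad):
    print(issue)
```

Returning a list of issues per record, rather than a single pass/fail flag, makes it easier to show end users exactly which rule was violated.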

To round off these topics, the panel leader, Krzysztof Wiśniewski, presented his recommendations on how to solve the issues discussed during the meeting. Participants had the opportunity to learn, for example, how to plan and carry out a comprehensive data quality project, how to organize and run a continuous data quality process in the enterprise, and how to avoid the risk of bad data or, where it does occur, fix it effectively.

The benefits of high data quality

For a company, investing in a strategic data quality improvement program could mean:

- At least 5% savings on labor costs,

- Building a strong foundation for digital transformation initiatives,

- Ensuring accurate reporting results and supporting sound management decisions,

- More successful marketing campaigns,

- Higher conversion rates in e-commerce solutions,

- Optimized stock management costs and improved supply chain management.

The general conclusion of the discussion panel was that data quality has not yet reached the point where everybody in a company understands how important it is. This applies especially to management. In fact, data quality forms the basis for many other operations and ultimately has a big effect on customer satisfaction. Most likely, the push to focus on data quality won’t come from management; its importance has to be flagged by those who work with the data.