RIMS Data Quality Project for a Leader in the Life Sciences Industry

Customer

The customer is a leading science and technology company that operates across healthcare, life science and performance materials. The company employs more than 50,000 staff members and is present in more than 60 countries. The customer provides solutions that make a positive difference to millions of people’s lives every day by creating more joyful and sustainable ways to live. Its work ranges from advancing gene editing technologies and discovering unique ways to treat the most challenging diseases to enabling the intelligence of devices.

Challenge

The customer wanted to improve the quality of data stored in their current Regulatory Information Management System (RIMS) ahead of a planned migration to a new platform. The company decided to implement a universal data quality solution that would initially be used to verify the RIMS data, but in the future could act as a solid base for continuous improvement of data quality in their other internal IT systems.

One of the most important requirements for the new solution was to support the integration of multiple data sources related to areas such as regulatory affairs, pharmacovigilance and product dictionary management. By integrating these sources and improving their quality, the company wanted to enable data standardization, review, cleansing, remediation, and exception management in a systematic way.

Another significant need was to automate the data quality checks. Until then, the entire process had been performed manually and involved authorized customer employees at each stage, including checking data and finding issues, analyzing them, and finally correcting the relevant records in the system. Automating at least some of these tasks was expected to shorten data quality correction cycles and reduce operational effort, and thus enable the company to focus more on strategic goals.

Solution

Before starting the project, our customer evaluated various vendors and their solutions and finally chose Informatica as one of the most mature and advanced tools for managing data quality across a multinational enterprise. Informatica not only met all the requirements but also had a proven track record of successful implementations in the pharmaceutical industry. The fact that Informatica has remained a leader in Gartner’s Magic Quadrant for Data Quality Tools for more than a decade was also an important factor for the customer when choosing the winning solution.

To build a comprehensive solution to identify data quality issues in the RIMS system, the company partnered with Striped Giraffe, which had extensive experience in data-related projects.

Striped Giraffe experts helped the customer design a solution architecture that successfully combined the newly acquired Informatica software (Informatica Data Quality) with other tools already owned by the pharmaceutical giant, including Microsoft SharePoint and K2 SmartForms.

In simple terms, the new solution works as follows. A dedicated interface extracts data sets from the RIMS system, and then Informatica Data Quality verifies whether the data sets conform to specific data quality rules. If there are records that don’t meet the requirements, the system marks them as “bad quality”. Next, a notification is sent to the authorized company employee, who either corrects the data in the RIMS system or flags the record as a data quality exception and leaves it unchanged. Quality assessments can be initiated manually, but in most cases they are executed automatically according to scheduled cycles and strictly defined workflows.

The Product Detail Set entity includes all the information that is relevant for a product under the applicable registration and regulation. It contains information such as active ingredients, manufacturers, characteristics, labels, indication, intended use, packaging, etc.

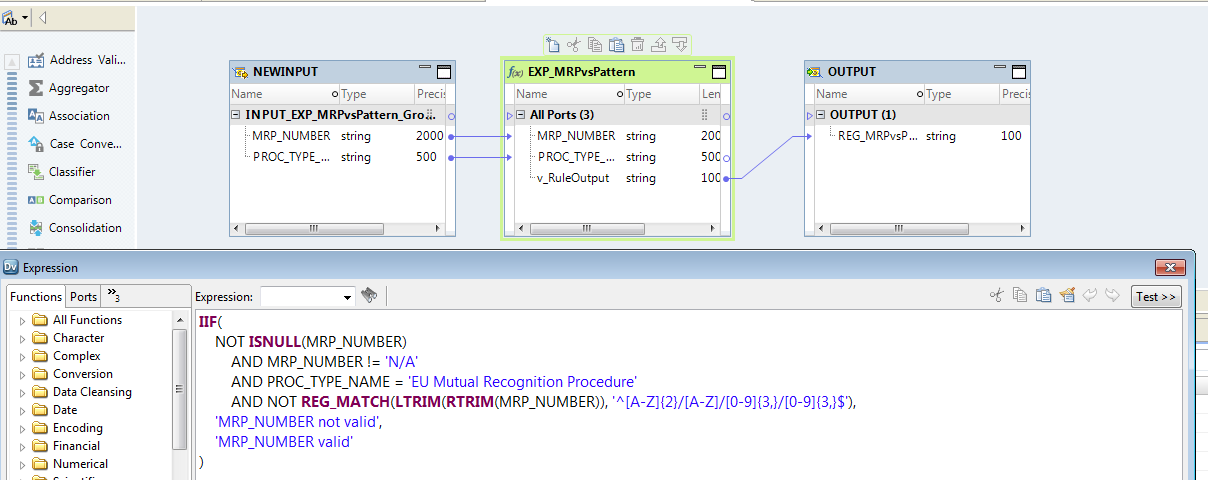

The key elements of the new solution are the data quality rules that can be either simple and consist of a single condition, or very sophisticated and include complex logic with multiple conditions.

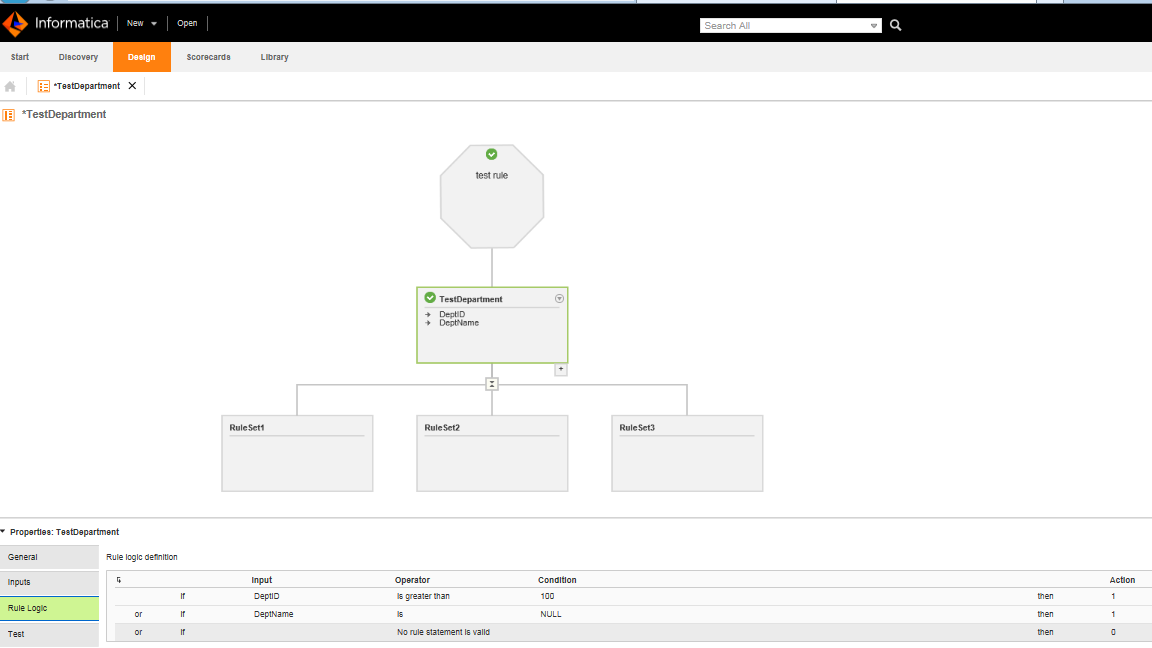

Data quality rules can be managed — in two ways: by a programmer in the Informatica Developer tool, or by a business analyst in the Informatica Analyst application where they can design and configure rule specifications in a user-friendly graphical interface. The way in which rules are created is very flexible and versatile. When defining a rule, you can include texts, numbers, dates and other formats of data used in IT systems. Furthermore, the solution supports different kinds of checks, logical operators, check against dictionaries and controlled vocabularies, as well as combinations of multiple conditions.

Fig. 1. Data quality rule configuration in Informatica Developer

Fig. 2. Graphical interface for designing data quality rules in Informatica Analyst
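The difference between a single-condition rule and a composite rule can be illustrated with a minimal Python sketch. This is not Informatica code and does not reflect how Informatica Developer or Analyst store rules internally; every function name, field name and vocabulary value below is hypothetical and chosen only for illustration.

```python
from datetime import date
from typing import Any, Callable, Mapping

Record = Mapping[str, Any]
Check = Callable[[Record], bool]


def not_null(field: str) -> Check:
    """Simple rule: the field must be present and non-empty."""
    return lambda rec: rec.get(field) not in (None, "")


def in_vocabulary(field: str, vocabulary: frozenset) -> Check:
    """Dictionary check: the value must come from a controlled vocabulary."""
    return lambda rec: rec.get(field) in vocabulary


def not_in_future(field: str, today: date) -> Check:
    """Date check: the field must hold a date that is not later than `today`."""
    def check(rec: Record) -> bool:
        value = rec.get(field)
        return isinstance(value, date) and value <= today
    return check


def all_of(*checks: Check) -> Check:
    """Composite rule: every condition must hold (logical AND)."""
    return lambda rec: all(c(rec) for c in checks)


def any_of(*checks: Check) -> Check:
    """Composite rule: at least one condition must hold (logical OR)."""
    return lambda rec: any(c(rec) for c in checks)


# Hypothetical controlled vocabulary and field names.
DOSAGE_FORMS = frozenset({"tablet", "capsule", "solution"})

rule = all_of(
    not_null("product_name"),
    in_vocabulary("dosage_form", DOSAGE_FORMS),
    not_in_future("approval_date", today=date(2024, 1, 1)),
)

good = {"product_name": "Example-A", "dosage_form": "tablet",
        "approval_date": date(2023, 5, 2)}
bad = {"product_name": "", "dosage_form": "tablet",
       "approval_date": date(2023, 5, 2)}

print(rule(good), rule(bad))
```

Building rules from small, reusable checks mirrors the idea that the same condition can appear in several rules, although the real tools express this graphically rather than in code.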

Each data quality rule must have exactly one data owner assigned. This is a customer employee who is notified when “bad quality” records are identified as a result of applying a specific rule to a given data set.

The results of data quality checks are presented to data owners as categorized lists of issues. The lists are created in K2 SmartForms and displayed on dedicated web pages. Here, a data owner is provided with detailed information on the issue, e.g. which data quality rule was violated, what data record was affected (with a direct link to the record in RIMS), what the problem was about, and how it can be solved. So, the solution not only notifies a data owner about the data quality violation, but also provides them with ongoing advice, hints and support in solving the problem.

The data owner is responsible for manually verifying the “bad quality” data and fixing the issue. They can do this in two ways. First, they can correct “bad quality” records directly in the RIMS system. Second, if they judge the record to be “good quality” data, they can mark it as an exception and close the issue. The definition of each data quality exception is automatically stored in the system to prevent the same incident from being reported in the next data quality assessment cycle.
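The exception mechanism can be pictured as a small registry that is consulted at the start of every assessment cycle. The sketch below is an assumption about how such suppression could work, not a description of the actual implementation: it identifies an exception by the pair of rule and record, and all class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Issue:
    rule_id: str    # hypothetical identifier of the violated rule
    record_id: str  # hypothetical identifier of the affected record


class ExceptionRegistry:
    """Remembers (rule, record) pairs that a data owner accepted as exceptions."""

    def __init__(self) -> None:
        self._accepted: Set[Issue] = set()

    def mark_exception(self, issue: Issue) -> None:
        """Store the exception so it is not reported again."""
        self._accepted.add(issue)

    def filter_new(self, issues: List[Issue]) -> List[Issue]:
        """Return only the issues that were not accepted as exceptions."""
        return [issue for issue in issues if issue not in self._accepted]


registry = ExceptionRegistry()
cycle_results = [Issue("R1", "A"), Issue("R1", "B")]

# The data owner reviews record B and decides the data is correct as it is.
registry.mark_exception(Issue("R1", "B"))

# In the next cycle the same two violations are detected, but only A is reported.
reported = registry.filter_new(cycle_results)
print(reported)
```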

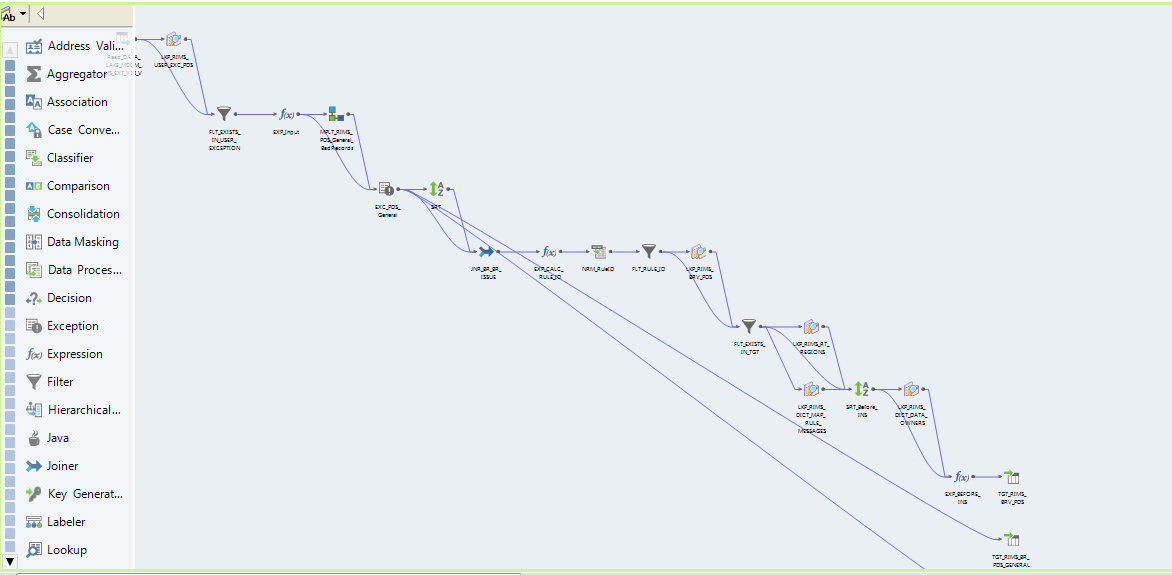

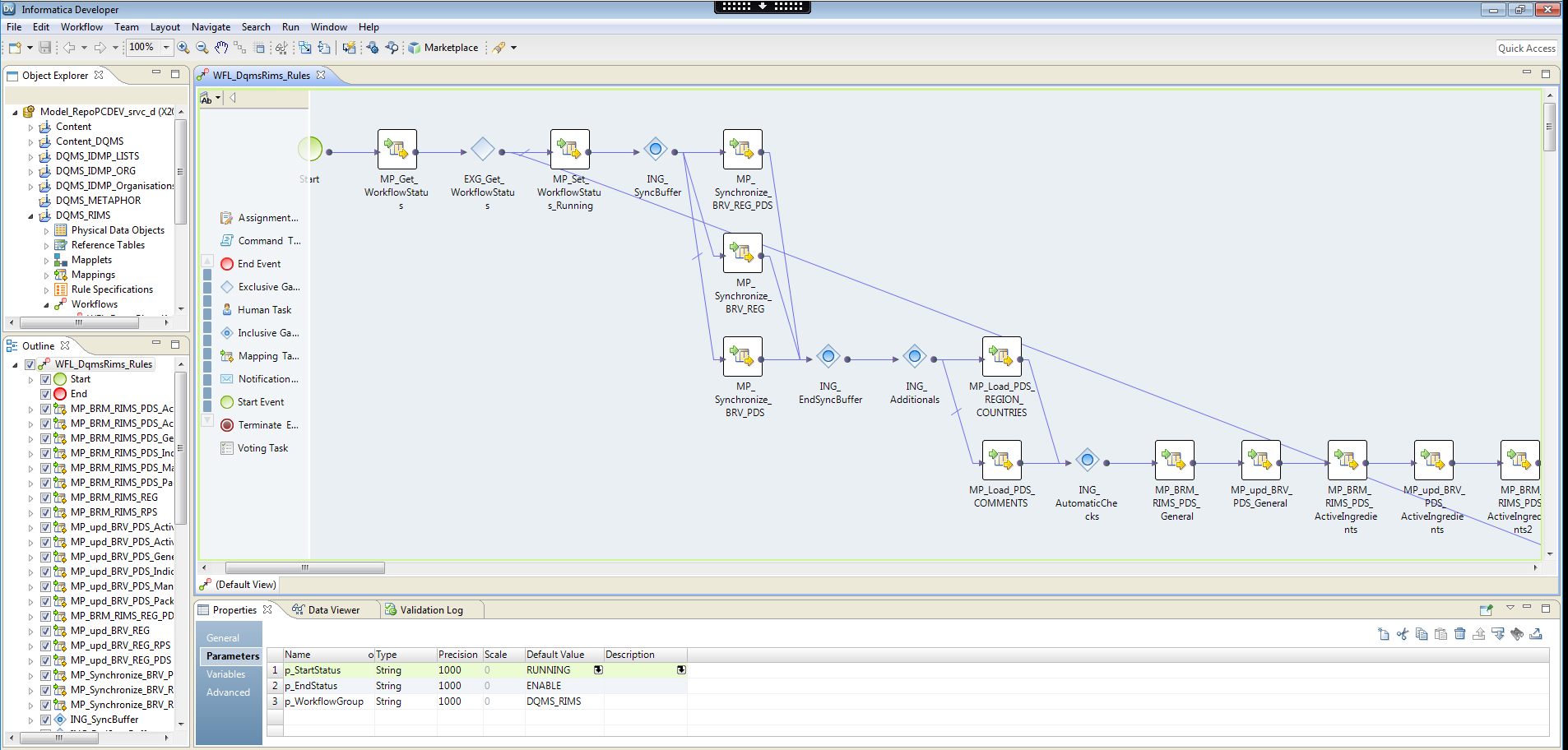

Subsequent steps of the data quality verification process are defined as a workflow that is designed and configured in the Informatica Developer tool. A workflow is a graphical representation of events, tasks, and decisions that constitute a business process. It’s worth mentioning that the tool allows users to define so called human tasks, that is, the actions that one or more users must perform manually on the data.

Fig. 3. Designing a mapping in Informatica Developer

Fig. 4. Designing a workflow in Informatica Developer

The system supports data profiling, which collects useful statistics and summaries on the quality of the examined data sets and helps in calculating KPIs.
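As a rough illustration of the kind of statistics profiling produces, the following Python sketch summarizes a single column. It is a simplified stand-in rather than the profiling performed by Informatica, and the function name, field name and chosen statistics are assumptions.

```python
from collections import Counter
from typing import Any, Dict, List, Mapping


def profile_column(records: List[Mapping[str, Any]], field: str) -> Dict[str, Any]:
    """Collect basic statistics for one field across a data set."""
    values = [rec.get(field) for rec in records]
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    return {
        "rows": len(values),
        "missing": len(values) - len(present),
        "distinct": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


# Hypothetical sample: two records have no usable country value.
sample = [
    {"country": "DE"},
    {"country": "DE"},
    {"country": "FR"},
    {"country": None},
    {},
]

stats = profile_column(sample, "country")
print(stats)
```

Figures such as the share of missing values per field are a natural input for the dashboard KPIs, although the exact KPI formulas are not described here.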

The status of data quality activities is monitored and made accessible to stakeholders via the Data Quality Dashboard, which presents KPIs, data quality reports, etc. KPIs and metrics are available for each critical regulatory activity: the Key Compliance Data Indicator (KCI) is calculated for information that affects the customer’s compliance, while the Key Quality Data Indicator (KQI) is calculated for the same information as the KCI and, additionally, for information that has a significant impact on the customer’s processes.

Data quality process in a nutshell

- Data sets are extracted from the RIMS database and delivered to the staging area by a dedicated interface.

- The Informatica Data Quality (IDQ) engine retrieves the data sets from the staging area.

- Scheduled workflows run the mappings, which apply the appropriate quality rules to the relevant data sets. Informatica Data Quality identifies quality issues in source records. Lower and upper score thresholds are configured to classify records as “good” or “bad quality”.

- After the rule execution is completed, a list of data quality issues is generated. It is stored together with statistical information.

- Data quality verification results are delivered to data owners via a dedicated web page using K2 SmartForms to display relevant information and guidelines.

- The data owner responsible for a specific quality issue either corrects the affected records in the RIMS system or marks the selected records as exceptions.
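The threshold step in the process above can be sketched in Python as follows. How scores are computed and how the band between the two thresholds is handled is not stated, so this sketch makes two explicit assumptions: the score is the percentage of checks a record passes, and records that fall between the thresholds are routed to manual review. All names and threshold values are hypothetical.

```python
from typing import Any, Callable, Mapping, Sequence

Record = Mapping[str, Any]
Check = Callable[[Record], bool]


def score(record: Record, checks: Sequence[Check]) -> float:
    """Percentage of checks the record passes (an assumed scoring scheme)."""
    if not checks:
        return 100.0
    passed = sum(1 for check in checks if check(record))
    return 100.0 * passed / len(checks)


def classify(value: float, lower: float, upper: float) -> str:
    """Map a score to a class using lower and upper thresholds."""
    if value >= upper:
        return "good"
    if value < lower:
        return "bad"
    return "review"  # assumption: the middle band goes to a manual check


# Hypothetical checks and records.
checks = [
    lambda rec: rec.get("product_name") not in (None, ""),
    lambda rec: rec.get("country") not in (None, ""),
]
complete = {"product_name": "Example-A", "country": "DE"}
partial = {"product_name": "Example-B", "country": None}
empty = {}

for rec in (complete, partial, empty):
    print(classify(score(rec, checks), lower=40.0, upper=90.0))
```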

Summary of key functionalities

- The new solution can connect to different data sources, including MS SQL databases, Oracle databases, SAP, MS SharePoint, MS Access, MS Excel, XML, JSON, Apache Hadoop, flat files, etc.

- Support for data profiling.

- Definitions of data quality rules are flexible enough to cover texts, numbers, dates and other formats of data used in IT systems. They support different kinds of checks, logical operators, checks against predefined dictionaries and controlled vocabularies, as well as combinations of several checks (e.g. country is within European Economic Area AND number of days > 30 AND ProductName is not null).

- The system supports versioning of data quality rules.

- The creation of data quality rules is supported by a graphical user interface and an explanation of the syntax.

- Business users and IT specialists can cooperate when designing a data quality rule, using the Informatica Analyst and Informatica Developer tools.

- The system allows existing data quality rules to be reused. When a shared data quality rule is modified, the system can highlight where the rule is in use, so all dependencies can be found in a few seconds.

- Each data quality rule has a unique identifier.

- One data owner must be assigned to each data quality rule. The data owner can be a role and depend on data within the data set (e.g. country).

- It is possible to define the following additional information for every data quality rule:

  - an explanation of why the rule has failed

  - a description of how the issue can be resolved

  - a reference and link to applicable guidance documentation

  - the criticality of the business rule, which can be used for the calculation of various KPIs

- After applying data quality rules to selected data sets, the system logs all statistical information from the evaluation process, including which data set the data quality rule was applied to, when the rule was executed, and what the result was. Every data quality issue identified within each data set is further processed by the data owner, who can mark selected issues as “data quality exceptions”.

- For each issue, the system indicates whether it is still open or whether it is an exception and can be closed.

- All new issues are addressed to data owners as data quality incidents.

- It is possible to reassign single, all or selected data quality issues to a different data owner.
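Several of the functionalities listed above (a unique rule identifier, versioning, a data owner that depends on the data, and the additional descriptive fields) can be pictured as one rule record. The Python sketch below is only an illustration under stated assumptions: it is not the Informatica data model, the example condition from the list is treated as the condition a record has to satisfy, and the field names, role names, country subset and guidance URL are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Mapping

Record = Mapping[str, Any]

# Illustrative subset only, not the full list of EEA member states.
EEA_SAMPLE = frozenset({"DE", "FR", "PL", "NO"})


@dataclass(frozen=True)
class RuleSpec:
    rule_id: str                        # unique identifier
    version: int                        # rules are versioned
    check: Callable[[Record], bool]     # True when the record is compliant
    owner_for: Callable[[Record], str]  # owner may depend on the data
    failure_explanation: str
    resolution_hint: str
    guidance_link: str
    criticality: str


def evaluate(rule: RuleSpec, records: List[Record]) -> List[Dict[str, Any]]:
    """Apply one rule and return an open issue for every violating record."""
    return [
        {
            "rule_id": rule.rule_id,
            "record_id": rec["id"],
            "owner": rule.owner_for(rec),
            "status": "open",
            "explanation": rule.failure_explanation,
            "resolution": rule.resolution_hint,
        }
        for rec in records
        if not rule.check(rec)
    ]


rule = RuleSpec(
    rule_id="DQ-001",
    version=1,
    check=lambda rec: (
        rec.get("country") in EEA_SAMPLE
        and (rec.get("days") or 0) > 30
        and rec.get("ProductName") not in (None, "")
    ),
    owner_for=lambda rec: f"regulatory-affairs-{rec.get('country')}",
    failure_explanation="Record does not satisfy the combined condition.",
    resolution_hint="Check country, number of days and product name in RIMS.",
    guidance_link="https://example.com/guidance/DQ-001",  # placeholder URL
    criticality="high",
)

records = [
    {"id": "P1", "country": "DE", "days": 45, "ProductName": "Example-A"},
    {"id": "P2", "country": "DE", "days": 10, "ProductName": "Example-B"},
]

issues = evaluate(rule, records)
print(issues)
```

Keeping the owner as a function of the record, rather than a fixed name, is one way to model an owner that is a role and depends on a value such as the country.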

Results & Benefits

First of all, the main objective of the project has been fully achieved: the new solution made it possible to cleanse data in the existing RIMS system. After verifying all the source data, approximately 20,000 quality violations of various types and significance were identified. Each of them was assigned to an appropriate data owner for analysis.

In addition, the new solution allows the customer to perform ongoing data quality checks in the RIMS system on a daily basis. All new quality violations are identified within fully automated 24-hour cycles. That way, all the records critical for regulatory affairs are under constant quality monitoring.

During the project, multiple sets of data quality rules were designed and deployed by Striped Giraffe. These are universal and reusable company assets that may be applied in future data quality initiatives. Moreover, since the new solution was released, the company has been continuously improving its data quality tasks and developing new ones based on findings and conclusions from the ongoing data quality checks.

It should be noted that a high level of automation in data quality management has been achieved. Since the process is no longer manual, data quality inspections can be carried out more frequently with greater efficiency and effectiveness. In addition, data quality cycle time was drastically shortened compared to manual data quality checks performed before the solution was implemented.